1/12/1996

ARDIS = Automatic Resume Deciphering Intelligence Software


ARGIS = Automatic Resume Generating Intelligence Software

What are these software packages?

What will they do?

How will they help us?

How will they help our clients/candidates?

ARDIS

- This software will break up (dissect) a resume into its different constituents, such as:

a. Physical information (data) about a candidate (executive)

b. Academic information (data) about a candidate (executive)

c. Employment record (industry-, function- and products/services-wise)

d. Salary

e. Achievements/contributions

f. Attitudes/attributes/skills/knowledge

g. His preferences with respect to industry/function/location

In fact, if every candidate were to fill in our EDS, the information would automatically fall into the "proper" slots/fields, since our EDS forces a candidate to "dissect" himself into various compartments.

But getting every applicant/executive to fill in our standardised EDS is next to impossible, and may not even be necessary. Executives (who have already spent a lot of time and energy preparing/typing their biodatas) are most reluctant to sit down once more and spend a lot of time furnishing us the SAME information/data in our neatly arranged EDS blocks. For them, this duplication is a WASTE OF TIME! The EDS is designed for our (information handling/processing/retrieving) convenience, and that is the way he perceives it. Even if he is vaguely conscious that filling in the EDS would help him in the long run, he does not see any immediate benefit from filling it in, and hence is reluctant to do so.

We too have a problem: a "cost/time/effort" problem.

If we are receiving 100 biodatas each day (this should happen soon), to whom should we send our EDS, and to whom should we NOT?

This can be decided only by Senior Executives/Consultants who go through each and every biodata daily and reach a conclusion as to:

- Which resumes are of "interest" and need an EDS to be sent
- Which resumes are marginal or not of immediate interest, where we need not spend the time/money/energy of sending an EDS

We may not be able to employ a number of senior/competent consultants who can scrutinise all incoming bio-datas and take this decision on a DAILY basis! This itself would be a costly proposition.

SO,

On ONE HAND:

- We have the time/cost/energy/effort of sending an EDS to everyone

On the OTHER HAND:

- We have the time/cost of several Senior Consultants to separate the "chaff" from the "wheat"

NEITHER IS DESIRABLE.

But from each biodata received daily, we still need to decipher the relevant data/information and drop it into the relevant slots/fields.

OUR REQUIREMENTS / NEEDS

- Match a candidate's profile with the "Client Requirement Profile" against a specific request.
- Match a candidate's profile against the hundreds of recruitment advertisements appearing daily in the media (Job BBS).
- Match a candidate's profile against "specific vacancies" that any corporation (client or not) may "post" on our vacancy bulletin-board (unadvertised vacancies).
- Match a candidate's profile against the "companies most likely to hire/need such an executive", using our CORPORATE DATABASE, which will contain information such as the PRODUCTS/SERVICES of each and every company.
- Convert each biodata received into a RECONSTITUTED BIO-DATA (converted bio-data), so that we can send it out to any client/non-client organisation at the click of a mouse.
- Generate (for commercial/profitable exploitation) such by-product services as:
  · Compensation Trends
  · Organisation Charts
  · Job Descriptions, etc.
- Permit a candidate to log into our database and remotely modify/alter his bio-data.
- Permit a client (or a non-client) to log into our database and remotely conduct a SEARCH.
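The memo does not say how a profile would be "matched". As a minimal sketch, assuming each candidate profile and each vacancy has already been reduced to a set of key-words, one simple rule is to score the fraction of the vacancy's key-words that the candidate's profile contains and rank vacancies by that score. The profiles, vacancy names and the scoring rule are hypothetical illustrations.

```python
def match_score(candidate: set[str], requirement: set[str]) -> float:
    """Fraction of the requirement's key-words found in the candidate profile."""
    if not requirement:
        return 0.0
    return len(candidate & requirement) / len(requirement)

# Hypothetical profile and vacancies, already reduced to key-words.
candidate = {"marketing", "pharmaceuticals", "mba", "pune"}
vacancies = {
    "Client request A": {"marketing", "pharmaceuticals", "mba"},
    "Advertised vacancy B": {"finance", "ca", "mumbai"},
    "Bulletin-board vacancy C": {"marketing", "fmcg", "pune"},
}

# Best-matching vacancies first.
ranked = sorted(vacancies, key=lambda v: match_score(candidate, vacancies[v]), reverse=True)
for name in ranked:
    print(f"{match_score(candidate, vacancies[name]):.2f}  {name}")
```

The same scoring could be run against client requests, advertisements, bulletin-board postings or a corporate database alike; only the source of the requirement sets changes.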

ARDIS is required on the assumption that, for a long time to come, "typed" bio-datas will form the major source of our database. Other sources, such as:

- Duly filled-in EDS (hard copy)
- EDS on a floppy
- Downloading the EDS over the internet (or dial-up phone lines) and uploading it after filling it in (like Intellimatch)

will continue to play a minor role in the foreseeable future.


HOW WILL ARDIS WORK?

KEY-WORDS

To recapitulate, ARDIS will:

- Recognise "characters"
- Convert them into "WORDS"
- Compare them with the 6258 key-words which we have found in 3500 converted bio-datas (using ISYS). If a "word" has not already appeared at least 10 times in these 3500 bio-datas, then its chance (probability) of occurring in the next biodata is very small indeed.
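A minimal sketch of the comparison step, assuming OCR has already turned the scanned page into plain text (character recognition itself is not shown). The threshold of 10 follows the memo; the `keyword_counts` figures and the helper names are hypothetical stand-ins for the ISYS key-word list.

```python
import re
from collections import Counter

# From the memo: a word seen fewer than 10 times in the reference
# bio-datas has only a small chance of occurring again.
RARE_THRESHOLD = 10

def to_words(text: str) -> list[str]:
    """Split recognised characters into lower-case words."""
    return re.findall(r"[a-z]+", text.lower())

def classify_words(text: str, keyword_counts: Counter) -> dict[str, list[str]]:
    """Sort each word of a bio-data into known, rare or unknown."""
    result = {"known": [], "rare": [], "unknown": []}
    for word in to_words(text):
        count = keyword_counts.get(word, 0)
        if count >= RARE_THRESHOLD:
            result["known"].append(word)
        elif count > 0:
            result["rare"].append(word)
        else:
            result["unknown"].append(word)
    return result

# Hypothetical counts standing in for the 6258 key-words found with ISYS.
keyword_counts = Counter({"marketing": 120, "manager": 300, "achievement": 55, "kolhapur": 3})
print(classify_words("Marketing Manager, Kolhapur; major achievement", keyword_counts))
```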

But even then, ARDIS will store in memory each "occurrence" of each word (old or new, seen for the first time or the thousandth time), and will continuously calculate its "probability of occurrence" as

$$P = \frac{\text{No. of occurrences of the given word so far}}{\text{Total no. of occurrences of all the words in the entire population so far}}$$

So that, by the time we have SCANNED 10,000 bio-datas, we would have covered virtually every word that has even a small PROBABILITY of OCCURRENCE!

With each new bio-data scanned, the probability of occurrence of each "word" becomes more and more accurate!
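The formula for P can be kept up to date incrementally: store one running count per word plus one running total, and divide on demand. A minimal sketch, assuming each bio-data arrives as a list of recognised words; the class and method names are hypothetical.

```python
from collections import Counter

class WordStatistics:
    """Running tally of word occurrences across all bio-datas scanned so far."""

    def __init__(self) -> None:
        self.counts = Counter()  # occurrences of each word so far
        self.total = 0           # occurrences of all words so far

    def scan(self, words: list[str]) -> None:
        """Record every occurrence in one newly scanned bio-data."""
        self.counts.update(words)
        self.total += len(words)

    def probability(self, word: str) -> float:
        """P = occurrences of this word / occurrences of all words."""
        if self.total == 0:
            return 0.0
        return self.counts[word] / self.total

stats = WordStatistics()
stats.scan(["sales", "manager", "sales"])
stats.scan(["finance", "manager"])
print(stats.probability("sales"))    # 2 / 5 = 0.4
print(stats.probability("legal"))    # never seen: 0.0
```

Because only counts are stored, every new bio-data refines all probabilities at once without re-reading the earlier ones.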

The same logic will hold for:

- KEY PHRASES
- KEY SENTENCES
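Extending the word count to phrases can be sketched by counting runs of consecutive words (n-grams). Treating a "key phrase" as a two-word run is an assumption for illustration only; the memo does not define phrase length, and sentences could be handled the same way with longer runs. The sample bio-datas are hypothetical.

```python
from collections import Counter

def ngrams(words: list[str], n: int) -> list[tuple[str, ...]]:
    """All runs of n consecutive words; n=2 gives two-word phrases."""
    return [tuple(words[i:i + n]) for i in range(len(words) - n + 1)]

phrase_counts = Counter()
for biodata in [["major", "achievement", "attained"],
                ["major", "achievement", "reached"]]:
    phrase_counts.update(ngrams(biodata, 2))

total = sum(phrase_counts.values())
for phrase, count in phrase_counts.most_common():
    # Probability of occurrence of each phrase, as for single words.
    print(" ".join(phrase), count, f"{count / total:.2f}")
```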

The "name of the game" is PROBABILITY OF OCCURRENCE.

As someone once said, if you allow 1000 monkeys to keep hammering the keys of 1000 typewriters for 1000 years, you will find at the end that, between them, they have "reproduced" the entire literary works of Shakespeare!

But today, if you store in a supercomputer:

- all the words appearing in the English language (incl. verbs/adverbs/adjectives etc.)
- the "logic" behind the construction of the English language,

then I am sure the supercomputer could reproduce the entire works of Shakespeare in 3 months!

And, as you will have noticed, ARDIS is a self-learning type of software. The more it reads (scans), the more it learns (memorises words, phrases and even sentences).

Because of its SELF-LEARNING/SELF-CORRECTING/SELF-IMPROVING capability, ARDIS gets better and better equipped to detect, in a scanned biodata:

- Spelling mistakes (wrong word)
- Context mistakes (wrong prefix or suffix): wrong PHRASE
- Preposition mistakes: wrong PHRASE
- Adverb/verb mistakes: wrong SENTENCE

With minor variations, all thoughts, words (written), speech (spoken) and actions keep repeating again and again and again.

It is this REPETITIVENESS of words, phrases and sentences in resumes that we plan to exploit.

In fact, by examining and memorising the several hundred (or thousand) "sequences" in which the words appear, it should be possible to "construct" the "grammar", i.e. the logic behind the sequences. I suppose this is the manner in which experts were able to unravel the "meaning" of Egyptian hieroglyphic inscriptions.

How do we build directories of "phrases"?

From the 6252 words, let us pick any word, say "ACHIEVEMENT".

Now we ask the software to scan the directory containing the 3500 converted bio-datas, with the instruction that every time the word "ACHIEVEMENT" is spotted, the software will immediately record its prefix. The software will record all the words that appeared before "ACHIEVEMENT", as well as the "number of times" each of these prefixes appeared.


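e.g.

| No. | Prefix | No. of occurrences | Probability of occurrence |
|---|---|---|---|
| 1 | Major | 10 | 10/55 = 0.1818 |
| 2 | Minor | 9 | 9/55 = 0.1636 |
| 3 | Significant | 8 | 8/55 = 0.1455 |
| 4 | Relevant | 7 | 7/55 = 0.1273 |
| 5 | True | 6 | 6/55 = 0.1091 |
| 6 | Factual | 5 | 5/55 = 0.0909 |
| 7 | My | 4 | 4/55 = 0.0727 |
| 8 | Typical | 3 | 3/55 = 0.0545 |
| 9 | Collective | 2 | 2/55 = 0.0364 |
| 10 | Approximate | 1 | 1/55 = 0.0182 |
| | Total no. of occurrences | 55 | 1.0000 |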
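The prefix count described above can be sketched as a single pass over the converted bio-datas: whenever the target word appears, count the word just before it, then divide each count by the total. A minimal sketch, assuming plain-text bio-datas and a lower-case target word; the three sample snippets are hypothetical, not the 3500 converted bio-datas.

```python
import re
from collections import Counter

def prefix_counts(texts: list[str], target: str) -> Counter:
    """Count every word that appears immediately before `target` (lower case)."""
    counts = Counter()
    for text in texts:
        words = re.findall(r"[a-z]+", text.lower())
        for i in range(1, len(words)):
            if words[i] == target:
                counts[words[i - 1]] += 1
    return counts

def probability_table(counts: Counter) -> list[tuple[str, int, float]]:
    """Each prefix with its count and its share of all occurrences."""
    total = sum(counts.values())
    return [(word, n, n / total) for word, n in counts.most_common()]

# Hypothetical snippets standing in for the converted bio-datas.
texts = [
    "Major achievement attained: sales up 20%.",
    "Minor achievement reached in 1994.",
    "My major achievement was a new plant.",
]
for word, n, p in probability_table(prefix_counts(texts, "achievement")):
    print(f"{word:<12}{n:>3}  {p:.4f}")
```

Swapping `words[i - 1]` for `words[i + 1]` (with the loop bound adjusted) gives the corresponding suffix count.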

As more and more bio-datas are scanned:

- The number of "prefixes" will keep increasing
- The number of "occurrences" of each prefix will also keep increasing
- The overall "population size" will also keep increasing
- The "probability of occurrence" of each prefix will keep getting more and more accurate, i.e. more and more representative

This process can go on and on (as long as we keep scanning bio-datas). But the "accuracy improvements" will decline/taper off once a sufficiently large number of prefixes (to the word "ACHIEVEMENT") have been accumulated: saturation takes place.

The whole process can be repeated with the words that appear as "SUFFIXES" to the word ACHIEVEMENT, and the probability of occurrence of each suffix can also be determined.

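| No. | Suffix | No. of occurrences | Probability of occurrence |
|---|---|---|---|
| 1 | Attained | 20 | 20/54 = 0.3704 |
| 2 | Reached | 15 | 15/54 = 0.2778 |
| 3 | Planned | 10 | 10/54 = 0.1852 |
| 4 | Targeted | 5 | 5/54 = 0.0926 |
| 5 | Arrived | 3 | 3/54 = 0.0556 |
| 6 | Recorded | 1 | 1/54 = 0.0185 |
| | Total (population-size of all the occurrences) | 54 | 1.000 |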

Having figured out the "probabilities of occurrence" of each of the prefixes and each of the suffixes (to a given word, in this case "ACHIEVEMENT"), we could next tackle the issue of "a given combination of prefix and suffix".

e.g. What is the probability of

"major" ACHIEVEMENT "attained"

where "major" is the prefix and "attained" is the suffix?
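One way to answer this, sketched under the assumption that we count (prefix, suffix) pairs directly: record the pair of words surrounding every occurrence of the target word, then divide the count of a given pair by the total number of pairs. The sample texts and function names are hypothetical.

```python
import re
from collections import Counter

def context_counts(texts: list[str], target: str) -> Counter:
    """Count (prefix, suffix) pairs around each occurrence of `target`."""
    pairs = Counter()
    for text in texts:
        words = re.findall(r"[a-z]+", text.lower())
        for i in range(1, len(words) - 1):
            if words[i] == target:
                pairs[(words[i - 1], words[i + 1])] += 1
    return pairs

def combination_probability(pairs: Counter, prefix: str, suffix: str) -> float:
    """Share of all observed contexts that are exactly (prefix, suffix)."""
    total = sum(pairs.values())
    return pairs[(prefix, suffix)] / total if total else 0.0

texts = [
    "Major achievement attained in 1994.",
    "Major achievement reached.",
    "Minor achievement attained.",
    "Major achievement attained again.",
]
pairs = context_counts(texts, "achievement")
print(combination_probability(pairs, "major", "attained"))  # 2 / 4 = 0.5
```

If only the two separate tables were available, multiplying the prefix and suffix probabilities (10/55 × 20/54 ≈ 0.067) would give an estimate, but that assumes prefix and suffix occur independently, which counting the pairs directly does not.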

Why is all of this statistical exercise required?

If we wish to stop at merely deciphering a resume, then I don't think we need to go through this.

For mere "deciphering", all we need is to create a KNOWLEDGE-BASE of:

- Skills
- Knowledge
- Attitudes
- Attributes
- Functions
- Edu-Qualifications
- Products/Services
- Names
- Industries
- Companies
- etc.

Having created the knowledge-base, simply scan a bio-data, recognise the words, compare them with the words contained in the knowledge-base, find the CORRESPONDENCE/EQUIVALENCE, and allot/file each scanned word into the respective "fields" against each PEN (Permanent Executive No.).

PRESTO! You have dissected and stored the MAN in the appropriate boxes.

Our EDS has these "boxes". The problem is manual data-entry. The D/E operator:

- Searches for the appropriate "word" in the appropriate "EDS box" and transfers it to the appropriate screen.

To eliminate this manual (time-consuming) operation, we need ARDIS.

We already have a DATA-BASE of 6500 words. All we need to do is to write down against each word whether it is a:

- Skill
- Attribute
- Knowledge
- Edu.
- Product
- Company
- Location
- Industry
- Function
- etc.

The moment we do this, what was a mere "data-base" becomes a "KNOWLEDGE-BASE", ready to serve as a "COMPARATOR".

And as each new bio-data is scanned, it will throw up words for which there is no "clue". Each such new word will have to be manually "categorised" and added to the knowledge-base.
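The comparator described above can be sketched as a dictionary lookup: each scanned word is filed under its category for the given PEN, and words with no "clue" are set aside for manual categorisation. A minimal sketch; the knowledge-base entries, the stop-word list, the category labels and the PEN format are all hypothetical.

```python
import re
from collections import defaultdict

# Hypothetical excerpt of the categorised word list (the "knowledge-base").
KNOWLEDGE_BASE = {
    "marketing": "Function",
    "negotiation": "Skill",
    "mba": "Edu. Qualification",
    "pharmaceuticals": "Industry",
    "pune": "Location",
}
# Hypothetical filler words that carry no field information.
STOP_WORDS = {"a", "and", "in", "of", "the"}

def file_biodata(pen: str, text: str, kb: dict[str, str]):
    """File each recognised word under its field for this PEN.

    Also returns the words with no "clue" in the knowledge-base,
    which an operator would categorise manually.
    """
    fields = defaultdict(list)
    uncategorised = []
    for word in re.findall(r"[a-z]+", text.lower()):
        if word in STOP_WORDS:
            continue
        category = kb.get(word)
        if category is None:
            uncategorised.append(word)
        else:
            fields[category].append(word)
    return {pen: dict(fields)}, uncategorised

record, todo = file_biodata("PEN-000123", "MBA, Marketing in Pharmaceuticals, Pune, SAP", KNOWLEDGE_BASE)
print(record)
print(todo)  # words awaiting manual categorisation
```

Once an operator assigns a category to a new word (for instance by adding it to `KNOWLEDGE_BASE`), it is recognised automatically on every later scan.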

Then what is the advantage of calculating the probability of occurrence for:

- each word
- each prefix
- each suffix
- each phrase
- each sentence?

The advantages are:

# 1 – Detect “unlikely” prefix/suffix

Suppose ARDIS detects

“Manor Achievement”

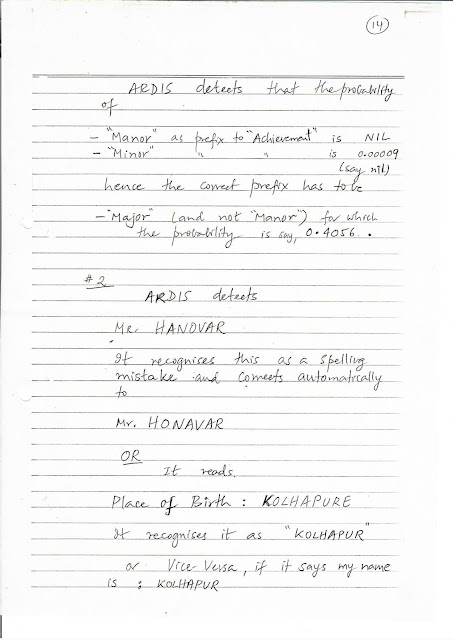

ARDIS detects that the probability

of

-

“Manor” as prefix to “Achievement” is NIL

-

“Manor” as prefix to “Achievement” is 0.00009 (Say

nil)

Hence the correct prefix has to be

-

“Major” (and not “Manor”) for which the

probability is say, 0.4056.
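A sketch of this correction, assuming "unlikely" means a prefix probability below a small cut-off and that candidate replacements are the known prefixes within two single-letter edits (Levenshtein distance). The counts are copied from the prefix table for "ACHIEVEMENT"; the cut-off, edit limit and function names are hypothetical. Note that "minor" is exactly as close to "manor" as "major" is, so spelling alone cannot decide; the probability of occurrence breaks the tie.

```python
# Prefix counts for "ACHIEVEMENT", copied from the prefix table in this memo.
PREFIX_COUNTS = {
    "major": 10, "minor": 9, "significant": 8, "relevant": 7, "true": 6,
    "factual": 5, "my": 4, "typical": 3, "collective": 2, "approximate": 1,
}
TOTAL = sum(PREFIX_COUNTS.values())  # 55
PREFIX_P = {w: n / TOTAL for w, n in PREFIX_COUNTS.items()}

def edit_distance(a: str, b: str) -> int:
    """Levenshtein distance: fewest single-letter edits turning a into b."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        cur = [i]
        for j, cb in enumerate(b, start=1):
            cur.append(min(prev[j] + 1,                # delete ca
                           cur[j - 1] + 1,             # insert cb
                           prev[j - 1] + (ca != cb)))  # substitute (free if equal)
        prev = cur
    return prev[-1]

def correct_prefix(word: str, prefix_p: dict[str, float],
                   nil: float = 0.001, max_edits: int = 2) -> str:
    """Keep a plausible prefix; otherwise pick the likeliest near spelling."""
    word = word.lower()
    if prefix_p.get(word, 0.0) >= nil:
        return word
    close = [w for w in prefix_p if edit_distance(word, w) <= max_edits]
    if not close:
        return word  # no clue: leave it for the operator
    return max(close, key=prefix_p.get)

print(correct_prefix("Manor", PREFIX_P))  # major
```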

# 2

ARDIS detects

MR. HANOVAR

It recognises this as a spelling mistake and automatically corrects it to

MR. HONAVAR

OR

It reads

Place of Birth: KOLHAPUR

and recognises "KOLHAPUR" as a place; or vice versa, if the text says "My name is: KOLHAPUR", it recognises it as a name.
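One reading of example #2, sketched under the assumption that the label in front of a value ("Name", "Place of Birth") tells ARDIS which word list to consult, and that the scanned value is then snapped to the closest entry in that list. Python's `difflib.get_close_matches` stands in here for whatever spelling-similarity measure ARDIS would use; the word lists and the 0.6 cut-off are hypothetical.

```python
import difflib

# Hypothetical field-specific word lists: the label decides which list applies.
FIELD_LISTS = {
    "name": ["honavar", "kulkarni", "deshpande"],
    "place of birth": ["kolhapur", "pune", "mumbai"],
}

def correct_field(label: str, value: str, cutoff: float = 0.6) -> str:
    """Snap a scanned value to the closest entry in the list for its field."""
    entries = FIELD_LISTS.get(label.strip().lower(), [])
    matches = difflib.get_close_matches(value.strip().lower(), entries, n=1, cutoff=cutoff)
    return (matches[0] if matches else value.strip()).upper()

print(correct_field("Name", "HANOVAR"))             # HONAVAR
print(correct_field("Place of Birth", "KOLHAPOR"))  # KOLHAPUR
```

A value with no close entry, or a label with no list, is returned unchanged for the operator to check.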

# 3

Today, while scanning (using OCR), when a mistake is detected, it gets highlighted on the screen or an asterisk/underline starts blinking.

This draws the attention of the operator, who manually corrects the "mistake" after consulting a dictionary or his own knowledge-base.

Once ARDIS has calculated the probabilities of lakhs of words, and even the probabilities of their "most likely sequences of occurrence", then hopefully the OCR can self-correct any word or phrase without operator intervention.

So the scanning accuracy of OCR should eventually become 100%, and not 75% to 85% as at present.

# 4

Eventually, we want a bio-data to be scanned and then automatically reconstitute itself into our converted BIO-DATA FORMAT.

This is the concept of ARGIS (Automatic Resume Generating Intelligence Software).

Here again, the idea is to eliminate the manual data-entry of the entire biodata: our ultimate goal.

But ARGIS is not possible without first installing ARDIS, and that too with the calculation of the "probability of occurrence" as the main feature of the software.

By studying, memorising and calculating the "probabilities of occurrence" of lakhs of words/phrases/sentences, ARDIS actually learns English grammar through "frequency of usage".

And it is this KNOWLEDGE-BASE which enables ARGIS to reconstitute a bio-data (in our format) in a GRAMMATICALLY CORRECT WAY.
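The memo does not specify how ARGIS would generate text. As a minimal sketch of "grammar through frequency of usage", assume a word-pair (bigram) model: for every word, count which words have followed it, then build a phrase greedily by always taking the most frequent next word. The training sentences and function names are hypothetical.

```python
from collections import Counter, defaultdict

def train_bigrams(sentences: list[list[str]]) -> dict[str, Counter]:
    """For every word, count which words have followed it."""
    following = defaultdict(Counter)
    for words in sentences:
        for current, nxt in zip(words, words[1:]):
            following[current][nxt] += 1
    return following

def most_likely_phrase(start: str, following: dict[str, Counter], max_words: int = 5) -> str:
    """Greedily extend `start` with the most frequent next word each time."""
    phrase = [start]
    while len(phrase) < max_words and following.get(phrase[-1]):
        phrase.append(following[phrase[-1]].most_common(1)[0][0])
    return " ".join(phrase)

sentences = [
    "major achievement attained in sales".split(),
    "major achievement attained in exports".split(),
    "minor achievement reached in sales".split(),
]
model = train_bigrams(sentences)
print(most_likely_phrase("major", model))
```

Greedy choice only picks the locally most frequent continuation; it illustrates the idea of frequency-driven phrasing rather than guaranteeing grammatical output.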
