05/09/2003 – 17/09/2003

Kartavya / Abhi / Sanjeev

Self-Learning Software

How does a one-year-old child learn to differentiate between the colours Red & Blue, and beyond that between different shades of Red?

This is another way of asking: “How does learning take place? What steps are involved in the learning process?”

There are no foolproof / ironclad / undisputed scientific theories. But the empirical evidence leads us to believe that the process (of learning) occurs somewhat as follows:

A mother points a finger at a colour and speaks aloud “RED”. The sound is captured by the child & stored in his memory.

This process is repeated a thousand times and, with each repetition, the memory gets etched deeper & deeper.

An “association” develops between the colour & the sound.

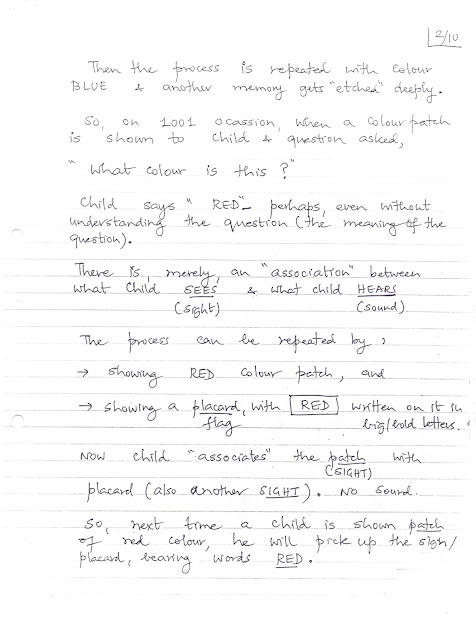

Then the process is repeated with the colour BLUE & another memory gets “etched” deeply.

So, on the 1001st occasion, when a colour patch is shown to the child & the question is asked, “What colour is this?”

The child says “RED”, perhaps even without understanding the question (the meaning of the question).

There is merely an “association” between what the child SEES (sight) & what the child HEARS (sound).

The process can be repeated by,

- Showing a RED colour patch, and

- Showing a placard (flag), with RED written on it in big / bold letters.

Now the child “associates” the patch (SIGHT) with the placard (also another SIGHT). No sound.

So, next time the child is shown a patch of red colour, he will pick up the sign / placard bearing the word RED.

Input (Sound) → Brain → Association / Memory (stored)

Input (Photo) (SIGHT) → Brain → Association / Memory (stored)

So, next time, what happens?

(First Diagram)

Input (Red patch) (SIGHT) → Brain ← Input (Recall from Memory / compare / database search)* → Output (SOUND) → “RED”

OR

(Second Diagram)

Input (SIGHT, i.e. RED patch) → Brain ← Input (SIGHT: recall from Memory / database the word “RED” & match letters) → Output (SIGHT) → Pick up flag bearing letters “RED”

Remember that the two MAIN inputs to a brain are

- Sight (Eyes): 80% of learning takes place here

- Sound (Ears): 10% of learning takes place here

Of course, there are other, relatively minor inputs:

- Touch / Feel (Skin)

- Smell (Nose)

- Taste (Tongue)

The balance 10% of learning takes place through these.

These five are the brain's INPUT DEVICES.

In the examples listed earlier, the MOTHER acts as a human expert, who initiates the learning process by establishing the “references / benchmarks”.

In essence, she uses the process (of showing a patch & speaking aloud, or showing a patch & showing a placard) to transmit her OWN EXPERT KNOWLEDGE to the child.

So, all knowledge flows from a GURU!

You can even watch events & learn, without a single word being uttered!

You can close your eyes & listen to music & learn, without seeing who is singing!

Then there was Beethoven, who was deaf but composed great symphonies which he himself could not hear! But this is an exception.

What is the relevance of all this to “Self-Learning Software”?

Simple.

If we want to develop software which can identify / categorise a “resume” as belonging to VB, C++ etc.,

then all we need is to “show” the software 1000 resumes and speak aloud, “C++!”

Then, the 1001st time, when the software “sees” a similar resume, it will speak out loudly: “C++!”

So, first of all, we need a human expert, a GURU, who, after reading each resume, shouts C++ or VB or ASP etc.

When the Guru has accurately identified and segregated 1000 resumes each of C++ etc., we take those sub-sets & index their keywords, calculate the “frequency of occurrence” of each of those keywords & assign them “weightages” (probabilities).

Then we plot the graphs for each subset (i.e. each “skill”).
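The indexing step above can be sketched roughly as follows. This is only an illustration, not the memo's actual implementation: the function names `tokenize` and `keyword_weightages`, the tokenising rule, and the sample resumes are all assumptions, and “weightage” is taken here to mean the fraction of resumes in a subset that contain the keyword.

```python
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into keyword-like tokens.

    Assumption: tokens may contain '+', '#' and '.', so that "C++" survives.
    Trailing dots (end of sentence) are stripped.
    """
    raw = re.findall(r"[a-z][a-z0-9+#.]*", text.lower())
    return [t.rstrip(".") for t in raw]


def keyword_weightages(resumes):
    """Return {keyword: fraction of resumes in this subset containing it}."""
    doc_counts = Counter()
    for text in resumes:
        # Count each keyword once per resume, however often it repeats.
        doc_counts.update(set(tokenize(text)))
    total = len(resumes)
    return {kw: n / total for kw, n in doc_counts.items()}


# Hypothetical subsets, as already segregated by the human expert (the GURU).
labelled = {
    "C++": ["C++ developer, STL and templates", "Worked on C++ and MFC"],
    "VB": ["VB6 forms and ActiveX", "VB developer with Access"],
}

# One keyword profile (the data behind a "graph") per skill.
profiles = {skill: keyword_weightages(texts) for skill, texts in labelled.items()}
```

Sorting each profile by weightage, highest first, would give the data needed to plot the graph for that skill.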

Then, when we present to this software any / next resume, it would try to find the keywords. Let us say it found 40 keywords. Now let us compare this 40-keyword set with the

- VB keyword-set

- C++ keyword-set

- ASP keyword-set

& see what happens.

First Scenario (First Match), illustrated with a simple Venn diagram:

New Resume ∩ VB Keyword Set → only 10% match

Second Match (Venn diagram):

New Resume ∩ C++ Keyword Set → 30% match

Third Match (Venn diagram):

New Resume ∩ ASP Keyword Set → 50% match

We (i.e. the software) have to keep repeating this “match-making” exercise for a new resume with ALL THE KEYWORD-SETS, till it finds the highest / best match.

BINGO

The new resume belongs to an “ASP” guy!
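The match-making exercise can be sketched as a set overlap, under some assumptions: the keyword sets below are invented for illustration, the names `match_percent` and `best_skill` are hypothetical, and the match is measured as the share of the resume's keywords found in a skill's keyword set (weightages are ignored in this simple version). The sample is built to reproduce the 10% / 30% / 50% figures above.

```python
def match_percent(resume_keywords, skill_keywords):
    """Percentage of the resume's keywords that also occur in the skill's set."""
    resume_keywords = set(resume_keywords)
    if not resume_keywords:
        return 0.0
    overlap = resume_keywords & set(skill_keywords)
    return 100.0 * len(overlap) / len(resume_keywords)


def best_skill(resume_keywords, keyword_sets):
    """Compare the resume with ALL keyword sets and return the best match."""
    scores = {
        skill: match_percent(resume_keywords, kws)
        for skill, kws in keyword_sets.items()
    }
    return max(scores, key=scores.get), scores


# Hypothetical keyword sets, one per skill.
keyword_sets = {
    "VB": {"vb6", "activex", "forms", "access"},
    "C++": {"c++", "stl", "templates", "mfc"},
    "ASP": {"asp", "iis", "vbscript", "html", "sql"},
}

# Keywords found in the new resume (10 here, rather than 40, to keep it short).
new_resume = ["access", "c++", "stl", "mfc", "asp",
              "iis", "vbscript", "html", "sql", "tally"]

skill, scores = best_skill(new_resume, keyword_sets)
print(skill, scores)
```

A weighted version would add up the weightages of the overlapping keywords instead of simply counting them.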

That was the FIRST METHOD, where a human expert reads through 30000 resumes & then regroups these into smaller sub-sets of 1000 resumes each, belonging to different “skill-sets”.

This will be a very slow method!

SECOND METHOD

Here, instead of a (one) expert going through 30000 resumes, we employ 30000 experts: the jobseekers themselves!

Obviously, this METHOD would be very fast!

The underlying premise is this:

No one knows better than the jobseeker himself what precisely is his CORE AREA OF COMPETENCE / SKILL.

Is my skill

· VB

· C++

· ASP

· .Net?

So, if I have identified myself as belonging to VB OR C++ OR ASP etc., then you better believe it!

Now, all that we need to do is to take 1000 resumes of all those guys who call themselves VB, and find “keywords” from their resumes (& of course, weightages).

If there are jobsites where software guys are required to identify themselves by their “skills”, then the best course would be to search resumes on these jobsites by skills, then download the search-result resumes! Repeat this search / download exercise for each “skill” for which we want to develop “skill-graphs”.

This approach is fairly simple and, perhaps, more accurate too.

But,

- We have to find such jobsites & then satisfy ourselves that “skill-wise” searching of resumes (and downloading too) is possible

- Then subscribe for 1 month / 3 months, by paying Rs. 20000 / 40000! There is a cost factor here.

THIRD METHOD

We have already downloaded 150000 job advts. from various jobsites. For each of these we know the “Actual Designation / Vacancy-Name / Position” (thru Auto-converter).

We can re-group these advts. according to identical / similar vacancy names / actual designations. When we finish, we may get, against each specific “Vacancy-Name”, 500 to 5000 job advts.

Call each a sub-set (Vacancy-Name-wise).

Then index the keywords of each subset & calculate frequency-of-usage (weightage).

So, now we have Profile-Graphs which are not skill-wise, but “Vacancy-Name”-wise!
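A rough sketch of this regrouping, assuming each downloaded advt. is available as a (vacancy name, text) pair. The function name `vacancy_profiles` and the sample data are hypothetical, and “similar” vacancy names are matched here only by ignoring case and surrounding spaces, which is a deliberate simplification.

```python
from collections import Counter, defaultdict


def vacancy_profiles(adverts):
    """Group adverts by vacancy name and compute keyword weightages per group.

    adverts: iterable of (vacancy_name, advert_text) pairs.
    Returns {vacancy_name: {keyword: fraction of adverts containing it}}.
    """
    groups = defaultdict(list)
    for name, text in adverts:
        # Simplification: names are "identical" if they match after
        # lowercasing and trimming spaces.
        groups[name.strip().lower()].append(text)

    profiles = {}
    for name, texts in groups.items():
        counts = Counter()
        for text in texts:
            # Count each keyword once per advert.
            counts.update(set(text.lower().split()))
        profiles[name] = {kw: n / len(texts) for kw, n in counts.items()}
    return profiles


# Hypothetical job adverts.
adverts = [
    ("Web Developer", "asp iis html"),
    ("web developer ", "asp sql"),
    ("Systems Programmer", "c++ unix"),
]
profiles = vacancy_profiles(adverts)
```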

This can be done real fast & cheap! And it may suffice for Software Companies’ BROADER needs. A quick beginning can be made & results shown within a week!!!

Kartavya / Abhi

24-03-03

(1/2)

Self-Learning Software

Our goal is to make ResuMine / ResuSearch self-learn and improve with usage.

One way is for them to capture the knowledge of hundreds of expert users.

One opportunity (for self-learning) is to study the “editing” of structured database fields by subscribers.

When subscribers find a value missing in any field, they would (hopefully) try to go through the entire text of the email resume to find that missing value.

And if it does exist (but ResuMine somehow missed it), there is a good chance that the subscriber would find it, if he has the patience, and the need, to capture that value.

Having found the value, he would “insert” it in the appropriate field, via drag & drop or highlight + click (or through whatever method we specify).

Here is our opportunity to learn.

When any subscriber carries out any such editing, we must capture:

- In what field he inserted the value

- Where exactly in the resume he found the value

And we must aggregate all such “editing” instances.

If we do, the following type of scenario might emerge:

Say, “Edn.” (Level-Branch-Degree-Institution) was found missing in 10,000 resume extractions.

When subscribers search for the “Edn.” value in resumes, they may find the missing value in 8,000 cases and enter it.

Now, if our software tracks these 8,000 cases, it may discover that in 6,000 (out of 8,000) cases, subscribers located / picked up the value in ‘Personal Detail Block 1’.

Whereas the logic we have used looks for “Edn.” in ‘Edu Qualification Block 1’.

Such “discoveries” add to our learning and improve our extraction logic.
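The aggregation of edits could look something like this minimal sketch. It assumes each edit is logged as a (field, source block) pair; the function name `source_blocks_by_field` and the event format are illustrative, not part of ResuMine. The sample is scaled down from the 6,000-out-of-8,000 scenario above to 6 out of 8.

```python
from collections import Counter


def source_blocks_by_field(edit_events):
    """Tally, for each field, the resume blocks where subscribers found values.

    edit_events: iterable of (field, source_block) pairs, one per edit.
    Returns {field: Counter({source_block: number_of_edits})}.
    """
    tally = {}
    for field, block in edit_events:
        tally.setdefault(field, Counter())[block] += 1
    return tally


# Hypothetical log of subscriber edits for the "Edn." field.
events = (
    [("Edn.", "Personal Detail Block 1")] * 6
    + [("Edn.", "Edu Qualification Block 1")] * 2
)

tally = source_blocks_by_field(events)

# The block where subscribers most often found the missing value.
top_block, count = tally["Edn."].most_common(1)[0]
print(top_block, count)
```

A developer studying such a tally would see at a glance which block the extraction logic should also be searching.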

In essence, we must offer the “Edit” feature free to all subscribers (since we cannot guarantee 100% extraction accuracy), and encourage them to edit.

Since ResuMine is not a network-based software, a human developer would be required to write the code after studying the patterns (of locating missing values) that emerge from aggregation of edits.

(signature mark)
